Model Context Protocol (MCP) — bridge between AI and the world of data

Large language models (LLMs) impress with their general knowledge until you ask a company-specific question. In practice, they quickly reach their limits because they lack access to internal data and tools. Valuable knowledge sits in data silos such as wikis, ticket systems, or databases, which remain inaccessible to the chatbot. An AI assistant may be able to explain how to create a support ticket, but without a connection to the company's own systems, it cannot create an actual ticket with real customer data.

The result: the full potential of AI remains untapped because the decisive data context is missing. This is exactly where the Model Context Protocol (MCP) comes in.

This open standard, initiated by Anthropic at the end of 2024 and now supported by most leading AI providers, defines a uniform way in which AI applications can access various data sources and tools.

Instead of developing a separate interface for each system, implementing a single standardized protocol will be sufficient in the future. This article highlights the weaknesses of previous point solutions, explains how MCP overcomes them, and shows how companies can use the standard to get more out of their AI investments.

Status quo & challenges

Without MCP, each data source has to be connected to an AI solution separately. This results in a patchwork of scripts and plugins for databases, file systems, and APIs. Such custom integrations are time-consuming, error-prone, and difficult to maintain.

The consequences are information silos and a permanent loss of context. Business knowledge from Jira, Confluence, or CRM systems remains inaccessible to AI assistants, which results in inefficient workflows and an increased risk of errors.

The core of the problem lies in the multitude of incompatible interfaces. Each data source comes with its own authentication, data formats, and error handling. As soon as an API changes, the integration can easily break, and development teams have to rework it.

In the end, AI models are denied access to current company data. They are limited to their static training knowledge and therefore quickly reach their limits in production use.

Technical deep dive

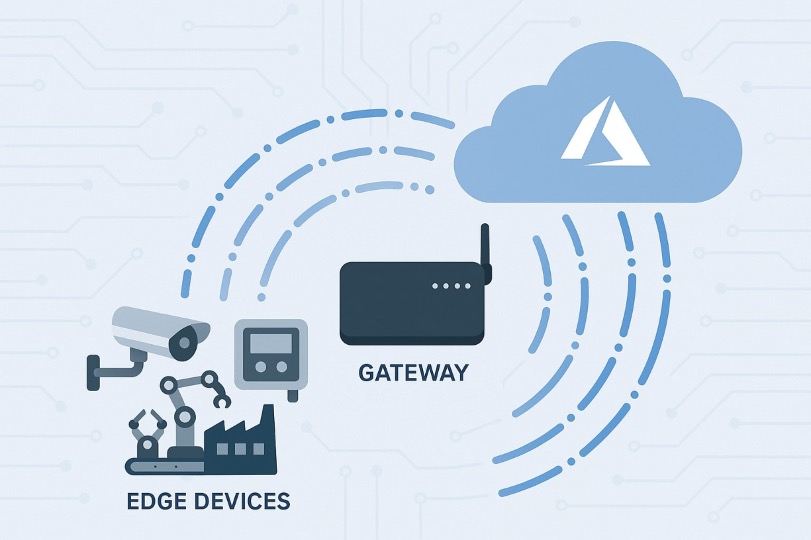

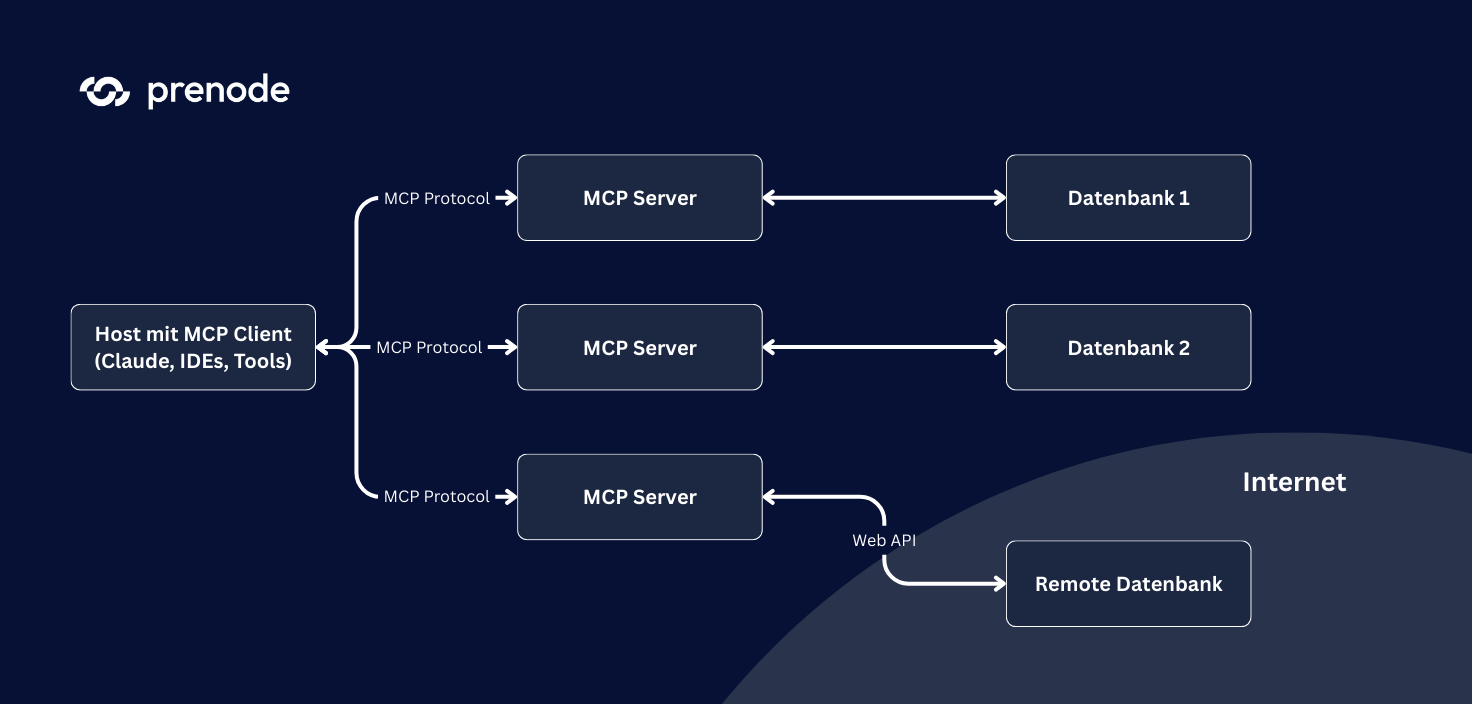

MCP is based on a clearly defined client-server model and extends proven web technologies with AI-specific functions. The architecture follows the principle of separation of concerns and enables modular, scalable integrations.

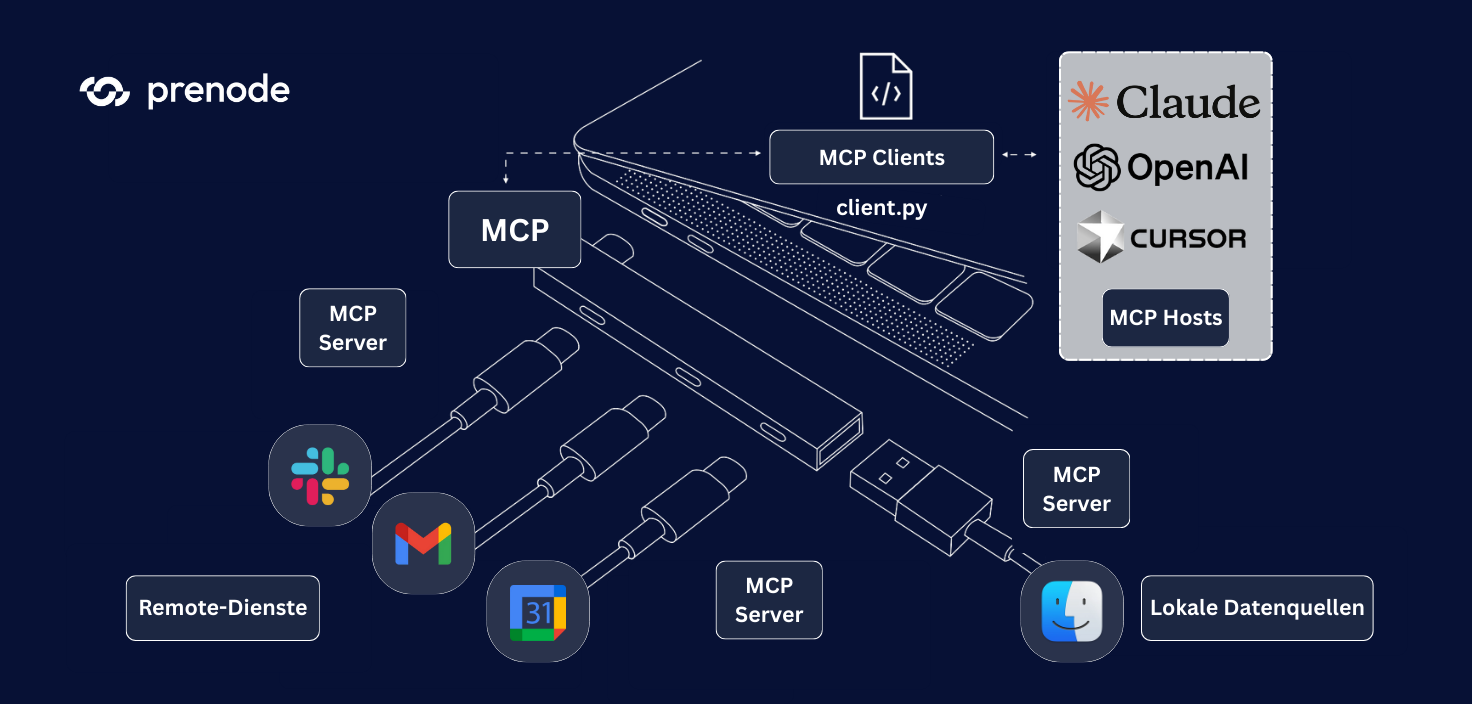

MCP host

The MCP host acts as a central control unit and usually runs within the AI application (e.g. IDE plug-in, chatbot environment, or desktop application). It manages one or more client instances and monitors both security aspects and access rights. The host ensures that an AI client only accesses resources for which explicit permissions exist.

It also combines policy management, session management, and context coordination in a single architectural component. In enterprise environments, the host can take on additional functions such as logging, monitoring, and compliance auditing, thus ensuring secure and traceable operation.
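The permission check performed by the host can be pictured as a default-deny allowlist. The following is only a minimal sketch: the `Host` class, the `grant` and `is_allowed` methods, and the session and tool names are hypothetical and not part of the MCP specification.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical host that tracks which tools each client session may call."""

    # Maps a session id to the set of tool names granted to that session.
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, session_id: str, tool: str) -> None:
        """Record an explicit permission for one session and one tool."""
        self.grants.setdefault(session_id, set()).add(tool)

    def is_allowed(self, session_id: str, tool: str) -> bool:
        """Default deny: unknown sessions and ungranted tools are rejected."""
        return tool in self.grants.get(session_id, set())


host = Host()
host.grant("session-a", "jira.search_issues")

print(host.is_allowed("session-a", "jira.search_issues"))  # True
print(host.is_allowed("session-b", "jira.search_issues"))  # False
```

Keeping the grants per session mirrors the idea that one session's permissions never leak into another.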

MCP client

An MCP client instance maintains exactly one session with one MCP server. It forms the bridge between the AI application and an external tool and opens a stateful communication channel (session) for this purpose. The capabilities available on both sides are negotiated over this channel (capability negotiation).
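Capability negotiation happens during an `initialize` handshake at the start of the session. The sketch below shows roughly what the client's request looks like and how the client could read the server's answer. The client name, the version strings, and the helper names `build_initialize_request` and `server_features` are illustrative assumptions, and `example_result` is a hand-written stand-in for a real server reply.

```python
import json


def build_initialize_request(request_id: int) -> dict:
    """Build the first message of a session, announcing what the client supports."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",  # illustrative version string
            "capabilities": {"roots": {"listChanged": True}},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def server_features(initialize_result: dict) -> set[str]:
    """Return the feature groups the server declared, e.g. 'tools' or 'resources'."""
    return set(initialize_result.get("capabilities", {}))


request = build_initialize_request(1)

# Hand-written example of what a server might answer (assumption, not real output).
example_result = {
    "protocolVersion": "2024-11-05",
    "capabilities": {"tools": {}, "resources": {}},
    "serverInfo": {"name": "example-server", "version": "0.1.0"},
}
features = server_features(example_result)

print(json.dumps(request, indent=2))
print(sorted(features))  # ['resources', 'tools']
```

After this exchange, the client only uses features that the server actually declared.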

Each AI agent instance runs in isolation in its own client environment, preventing unauthorized access between different agents. The client is often provided by a software development kit (SDK), for example for Python, TypeScript, or Go, which abstracts protocol details and gives developers an easy-to-use API.

MCP server

An MCP server acts as a standardized adapter for external resources: local components such as file systems or databases as well as remote services such as cloud CRM systems or APIs. It exposes these resources via MCP as defined “tools” and “resources” with clearly specified functions.

All servers follow the same protocol and message format rules, which means that any MCP client can address them. A single server can bundle several thematically related functions, such as all operations of a CRM system or all file system accesses, and can thus be plugged into the overall architecture as a modular extension.
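To make the adapter idea concrete, here is a minimal sketch of a server-side dispatcher written with the standard library only, not with an official SDK. It answers the `tools/list` and `tools/call` methods for a single tool. The `CUSTOMERS` data, the `lookup_customer` tool, and the `tool` and `handle` helpers are hypothetical; a real server would also publish an input schema for each tool, which is omitted here for brevity.

```python
from typing import Any, Callable

# Hypothetical in-memory stand-in for a CRM backend.
CUSTOMERS = {"c-1": "Ada Example"}

# Registry of tools this server exposes: name -> description and handler.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str) -> Callable:
    """Decorator that registers a function as a callable tool."""
    def register(func: Callable) -> Callable:
        TOOLS[name] = {"description": description, "handler": func}
        return func
    return register


@tool("lookup_customer", "Return the customer name for a customer id.")
def lookup_customer(customer_id: str) -> str:
    return CUSTOMERS[customer_id]


def handle(method: str, params: dict) -> dict:
    """Dispatch the two tool-related methods a client would send."""
    if method == "tools/list":
        return {"tools": [{"name": name, "description": entry["description"]}
                          for name, entry in TOOLS.items()]}
    if method == "tools/call":
        handler = TOOLS[params["name"]]["handler"]
        value = handler(**params.get("arguments", {}))
        return {"content": [{"type": "text", "text": str(value)}]}
    raise ValueError(f"unsupported method: {method}")


print(handle("tools/list", {}))
print(handle("tools/call", {"name": "lookup_customer", "arguments": {"customer_id": "c-1"}}))
```

Adding another CRM operation then only means registering one more function with the decorator.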

Communication and protocol

MCP uses JSON-RPC 2.0 as a standardized message format for exchanging commands and queries between client and server. Communication takes place via persistent connections, which enables interactive and stateful sessions — in contrast to classic, stateless REST APIs.
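A short sketch of the JSON-RPC 2.0 envelope may help here. Every request carries an `id`, and the matching response echoes it with either a `result` or an `error` member. The helper names and the `create_ticket` tool are hypothetical; the field names and the error code -32601 ("method not found") come from JSON-RPC 2.0 itself.

```python
import itertools
import json

# JSON-RPC 2.0 reserves this code for "method not found".
METHOD_NOT_FOUND = -32601

_next_id = itertools.count(1)


def make_request(method: str, params: dict) -> dict:
    """Build a request; the id lets the caller match the later response."""
    return {"jsonrpc": "2.0", "id": next(_next_id), "method": method, "params": params}


def make_result(request: dict, result: dict) -> dict:
    """Build a success response that echoes the request id."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def make_error(request: dict, code: int, message: str) -> dict:
    """Build an error response; a response carries either result or error."""
    return {"jsonrpc": "2.0", "id": request["id"],
            "error": {"code": code, "message": message}}


request = make_request("tools/call",
                       {"name": "create_ticket", "arguments": {"title": "Login fails"}})
response = make_result(request, {"content": [{"type": "text", "text": "created"}]})
failure = make_error(request, METHOD_NOT_FOUND, "Method not found")

print(json.dumps(request))
print(json.dumps(response))
print(json.dumps(failure))
```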

For local MCP servers, the connection is usually implemented via stdin/stdout pipes, while remote servers are typically reached over HTTP, with server-sent events (SSE) available for streaming responses. A central feature is dynamic capability discovery: once a client has connected to an MCP server, it can query the server's tools and resources through standardized list requests instead of relying on hard-coded knowledge.
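For the local stdin/stdout case, messages are exchanged as one JSON object per line. The loop below is a minimal sketch of that idea, not a complete transport: the `serve` function and its handler signature are assumptions, and notifications (messages without an `id`) are simply skipped. In-memory streams stand in for the real pipes so the example can run on its own.

```python
import io
import json
from typing import Callable, TextIO


def serve(reader: TextIO, writer: TextIO, handler: Callable[[str, dict], dict]) -> None:
    """Read newline-delimited JSON-RPC requests and write one response per request."""
    for line in reader:
        line = line.strip()
        if not line:
            continue
        message = json.loads(line)
        if "id" not in message:
            continue  # Notification: no response is sent; this sketch ignores it.
        result = handler(message["method"], message.get("params", {}))
        reply = {"jsonrpc": "2.0", "id": message["id"], "result": result}
        writer.write(json.dumps(reply) + "\n")
        writer.flush()


# In-memory streams replace sys.stdin and sys.stdout for the demonstration.
incoming = io.StringIO('{"jsonrpc": "2.0", "id": 7, "method": "ping", "params": {}}\n')
outgoing = io.StringIO()
serve(incoming, outgoing, lambda method, params: {})

print(outgoing.getvalue(), end="")
```

In a real local server, `sys.stdin` and `sys.stdout` would take the place of the two `StringIO` objects.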

Security and isolation

MCP relies on several complementary layers of security. On the client side, the host provides sandboxing, rights management, and consistent separation of sessions. Additional mechanisms such as authentication and authorization can be implemented on the server side. Communication between client and server can be encrypted, which also secures the exchange of sensitive data.

Each session is strictly isolated so that there can be no unauthorized access between different agents or resources. This architecture makes it possible to securely integrate even business-critical and confidential corporate resources via MCP.

This architecture significantly reduces integration complexity: Instead of a complex N×M link (each client individually with each tool), a clear N+M structure is sufficient — every client speaks MCP, every tool speaks MCP. The result is modular and expandable AI systems in which new capabilities can be seamlessly integrated simply by adding a server.
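The difference between N×M and N+M is easy to check with a small calculation. The function names and the figures of 4 clients and 10 tools are chosen purely for illustration.

```python
def point_to_point_integrations(clients: int, tools: int) -> int:
    """Every client needs its own integration with every tool."""
    return clients * tools


def protocol_integrations(clients: int, tools: int) -> int:
    """Every client and every tool implements the shared protocol once."""
    return clients + tools


clients, tools = 4, 10
print(point_to_point_integrations(clients, tools))  # 40
print(protocol_integrations(clients, tools))        # 14
```

Adding an eleventh tool costs four more integrations in the first model, but only one in the second.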

MCP is thus establishing a sustainable standard that promotes interoperability, ensures security and significantly reduces development costs.

Implementation challenges

Despite its advantages, MCP poses practical hurdles when implemented in existing process landscapes.

Data availability is a central issue: MCP can only access digitized and structured information. Many organizations must therefore first ensure that relevant data sources are identified and accessible. There is also the question of data quality. MCP does not correct incorrect or outdated information, which is why consistent data maintenance and the removal of duplicates are necessary in advance. Complementary techniques such as retrieval-augmented generation (RAG) can further improve context quality.

Integration into existing processes is also a challenge. MCP replaces point-to-point interfaces with an integration layer, which in enterprise environments often requires central MCP servers and clear embedding into the existing IT infrastructure.

Security also plays a crucial role. Although MCP has basic security mechanisms, it relies heavily on the surrounding infrastructure. Established standards such as OAuth and TLS are used for web-based connections, while in local scenarios authentication and rights management must be supplied by existing systems or policies.

Finally, performance and reliability are important factors. MCP servers introduce additional latency and must therefore be operated with high performance and availability, including load balancing, monitoring, and fallback mechanisms.
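A fallback mechanism can be as simple as trying several server instances in order. The following sketch assumes that each backend is wrapped in a zero-argument callable and that an unreachable server raises `ConnectionError`. The names `call_with_fallback`, `primary`, and `replica` are hypothetical.

```python
from typing import Callable, Optional, Sequence


def call_with_fallback(backends: Sequence[Callable[[], str]]) -> str:
    """Return the first successful result, trying the backends in order."""
    last_error: Optional[Exception] = None
    for backend in backends:
        try:
            return backend()
        except ConnectionError as exc:
            last_error = exc  # Remember the failure and try the next backend.
    raise RuntimeError("all backends failed") from last_error


def primary() -> str:
    # Simulates an MCP server instance that is currently unreachable.
    raise ConnectionError("primary MCP server unreachable")


def replica() -> str:
    # Simulates a healthy second instance of the same server.
    return "order 42: shipped"


result = call_with_fallback([primary, replica])
print(result)  # order 42: shipped
```

In production, such a wrapper would typically be combined with timeouts and monitoring so that repeated failures become visible.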

Benefits of MCP

The Model Context Protocol (MCP) provides a standardized framework for connecting AI systems to their environment and solves key integration problems:

Unified integration

MCP acts as a universal interface: once it is implemented in the AI client, a wide variety of data sources can be connected. Instead of individual API integrations, a single protocol is sufficient. New systems can be added on a plug-and-play basis without rebuilding the architecture.

Dynamic context extension

MCP enables persistent two-way connections between model and data source. This allows LLMs to continuously retrieve information and carry out actions — such as researching in a knowledge database, comparing results with customer data and opening a support ticket directly.

Higher response quality

Access to up-to-date data significantly increases the accuracy and relevance of answers. Models no longer rely solely on static training knowledge, but can use live data sources.

Open community ecosystem

As an open standard, MCP promotes a growing ecosystem of extensions. Hundreds of community connectors were already available at the beginning of 2025 — from GitHub to Slack to database servers. Companies benefit from prefabricated components and significantly reduce integration times.

Scalability and future-proofing

Decoupling tools makes architectures more flexible and robust. New requirements can be met by adding an MCP server. Since MCP is model-agnostic, various LLM providers can be used without having to rebuild the integration layer.

Best practice examples

To make the benefits of MCP tangible, here are two practical examples from different fields of application. They show how specific problems can be solved and how value can be created:

1. Knowledge management — Jira and Confluence seamlessly in AI assistance

A global company uses Jira for task tracking and Confluence as a knowledge base. Employees lose a lot of time because they laboriously collect information between tickets, wikis and emails for meetings or decisions. Context from these tools only enters AI systems manually, leading to delays and gaps.

Solution: An MCP server for Jira and Confluence provides the data consistently, while an AI assistant is connected as an MCP client. The assistant can directly access tickets and knowledge pages, run queries, or create new issues, all secured by existing OAuth and authorization systems.

Result: Employees ask questions in natural language and get immediate answers without having to switch applications. Fewer context switches enable faster decisions and more focus on value-adding work. Even complex multi-step actions, such as creating several linked tickets at once, can be completed in one step.

2. Customer service assistant — AI agent with 360° view of customer data

An e-commerce provider processes inquiries via many channels. Service employees must manually search CRM, order history, knowledge database and ticket system. This costs time, delays answers and leads to inconsistencies.

Solution: All relevant systems are connected via MCP and integrated into a customer support assistant. An AI chatbot can directly retrieve customer data, order status, and product information, and create or update a ticket as needed, all within a single natural-language dialog.

Result: Customer service becomes faster and more precise. For example, the assistant takes into account that a customer has already made multiple inquiries about the same problem and reacts accordingly. Routine questions are answered automatically, while employees focus on complex cases. New channels, such as social media, can be integrated easily by adding another MCP server.

Conclusion

The Model Context Protocol is a decisive step for the development of AI systems. It standardizes the connection to external tools, reduces integration costs and enables scalable, powerful applications.

The benefits are obvious: faster integrations, better response quality, and lower maintenance costs. At the same time, an open ecosystem of connectors is growing, opening up new use cases.

Companies should start gradually through pilot projects, combined with investments in data quality and security. If you act early, you can secure competitive advantages and future-proof your AI landscape.
