AI in sales: Automated tender analysis with LLMs

Public tenders play a major economic role for many companies — in Germany, the volume of public contracts awarded in 2023 was around 123.5 billion euros [https://www.destatis.de/DE/Themen/Staat/Oeffentliche-Finanzen/Vergabestatistik/_inhalt.html].

At the same time, tendering on the bidder side is extremely labor-intensive and error-prone. A tender dossier often comprises several hundred pages in various formats (e.g. PDF, Word, Excel, scanned documents) without a uniform layout.

Important deadlines, conditions of participation and technical specifications are spread over many annexes. Without automated support, specialists often invest hours or even days in the initial review of each call for tenders. Because formal requirements (correct forms, submission deadlines) are closely tied to strict exclusion criteria, even a small mistake can result in the exclusion of a bid. Manual processing ties up valuable personnel and leads to “tender fatigue” and high opportunity costs. Against this background, solutions are needed that improve the efficiency and precision of tender management while ensuring that all compliance requirements are met.

Challenges in the tendering process

- Heterogeneous documents: Tender documents come in a wide variety of formats (PDF, Word, images) and structures. Each awarding authority designs its documents individually, which makes automatic parsing and normalization difficult. Robust preprocessing (Optical Character Recognition [OCR] for scanned documents) is necessary to actually capture the text and break it down into logical sections (“chunks”).

- High manual effort: Without AI support, teams often spend hours evaluating a tender manually. According to a practical report, reviewing a tender and extracting the decision-relevant information previously took between 2 hours and several days. The result is long lead times and a falling bid rate.

- Strict compliance: Public procurement procedures require precise compliance with all requirements. Missing evidence or missed deadlines lead directly to exclusion. For an AI system, this means that every piece of information it generates (e.g. submission dates, required certificates) must be factually correct and verifiable. Hallucinated or inaccurate answers would be disastrous here, as they could directly harm the bidder.

- Complex knowledge management: Preparing a bid is a cross-departmental task (technology, law, finance, sales) and often requires recourse to earlier knowledge. In many companies, however, previous bids and compliance information are not systematically archived or reused. As a result, knowledge is not built up effectively and every tender is largely reviewed from scratch.

These factors make it clear that the aim is not only to save time, but above all to achieve reliable results. The AI solution must precisely extract relevant facts from the original documents and, if possible, substantiate its answers with sources in order to convince human specialist users.
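The requirement that every extracted fact be verifiable against the original documents can be illustrated with a simple grounding check. The following is a minimal sketch, not a complete safeguard: the `ExtractedFact` structure, the field names and the sample passages are hypothetical, and the check only tests whether the reported value appears verbatim in the passage cited as evidence.

```python
import re
from dataclasses import dataclass


@dataclass
class ExtractedFact:
    field: str        # hypothetical field name, e.g. "submission_deadline"
    value: str        # value reported by the model
    source_text: str  # passage the model cited as evidence


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so layout differences do not matter."""
    return re.sub(r"\s+", " ", text).strip().lower()


def is_grounded(fact: ExtractedFact) -> bool:
    """Return True only if the value appears verbatim in the cited passage."""
    return normalize(fact.value) in normalize(fact.source_text)


# Illustrative sample data (invented for this sketch).
facts = [
    ExtractedFact("submission_deadline", "15.09.2025",
                  "Offers must be received by 15.09.2025, 12:00."),
    ExtractedFact("certificate", "ISO 27001",
                  "Bidders must hold a valid ISO 9001 certificate."),
]

# Facts that fail the check are routed to a human reviewer.
needs_review = [f.field for f in facts if not is_grounded(f)]
print(needs_review)
```

A verbatim match is deliberately strict. In practice, dates and amounts written in different formats would need normalization before such a check is useful.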

Solution: Use of LLMs and RAG

This is where Large Language Models (LLMs) come in. Modern LLMs can understand and process large amounts of text. They can identify relevant passages, answer questions, or create summaries. What is decisive, however, is how the model is given access to the knowledge contained in the tender documents. Standard LLMs work with the knowledge they learned in training, which has a fixed cut-off date and limited factual coverage. This is not enough for fact-critical tasks such as tender analysis.

Retrieval-Augmented Generation (RAG) is a hybrid architectural style that addresses exactly this gap. In RAG, a pre-trained LLM is supplemented with external, up-to-date sources at runtime. Specifically, a semantic search index is first built from the tender documents (embeddings plus a vector database). In response to a user request (e.g. “Which certifications are required?”), a retriever searches this index and returns the most relevant sections of text. Only these document segments are handed over to the LLM, which generates the answer on their basis. As a result, the model no longer relies only on its internal “parametric memory,” but on explicitly provided facts.
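The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration under stated assumptions: the bag-of-words `embed` function stands in for a real embedding encoder, an in-memory list stands in for the vector database, the sample chunks are invented, and the final LLM call is omitted, so the sketch stops once the prompt is built.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context_chunks: list) -> str:
    """Place only the retrieved passages in front of the question."""
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(context_chunks, 1))
    return (
        "Answer using only the numbered context passages below. "
        "If the answer is not in them, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Illustrative chunks (invented for this sketch).
chunks = [
    "The following certifications are required: ISO 27001 and ISO 9001.",
    "Offers must be submitted by 15 September 2025 at 12:00.",
    "The contract covers operation of the data center network.",
]
question = "Which certifications are required?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The important design point is that the model only sees the retrieved passages, so its answer can be traced back to them.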

The following benefits of the RAG approach are central to tender analysis:

- Fact-based and transparent: Since the LLM constructs its answers from the retrieved documents, the information is anchored in the sources. The model can cite relevant passages of text as evidence. RAG systems thus reduce the rate of errors and invented content, and with it the risk of hallucinations. For users, this means that they can immediately cross-check the AI response, which strengthens trust in the system.

- Timeliness and flexibility: The knowledge base (here: the tender documents) remains independent of the model and can be updated at any time. New documents are simply indexed without having to retrain the LLM. This ensures that the system always works with the latest data. In contrast, fine-tuning the LLM on each set of tender documents would be expensive and impractical, as every award procedure brings new data.

- Cost and compute effort: Because no complex training runs are necessary, the effort shifts to one-time indexing and fast retrieval. This is usually more cost-effective than continuously retraining large models. Another advantage: sensitive data remains in the company's own database and is only inserted into the prompt when required. With external cloud APIs, by contrast, every prompt element is sent outside the company. Many companies therefore rely on private LLM instances or on-premise solutions to maintain full control over confidential tender content.

RAG therefore combines the strengths of both worlds, the generative language ability of LLMs and the reliable information from a dedicated document corpus, and thus offers the factual basis and traceability that tender analysis requires. The technology enables fact-based, verifiable answers and thus greater certainty throughout the process.

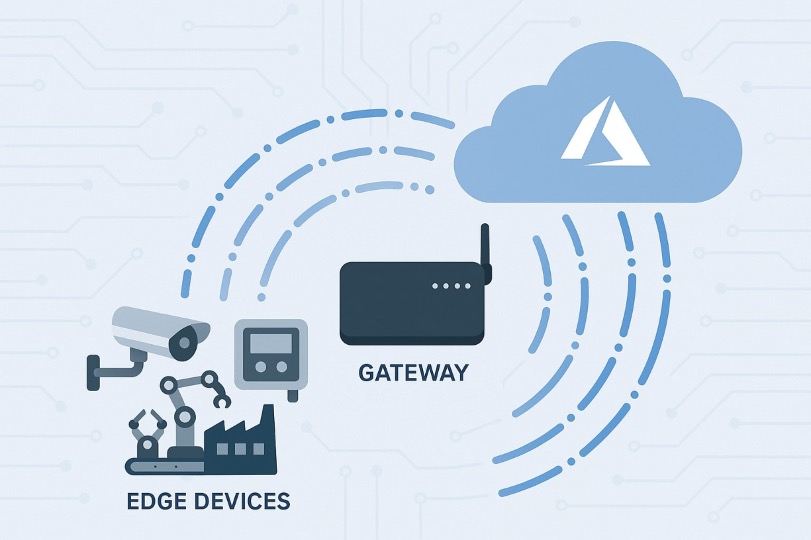

Technical architecture of a RAG system

A practical RAG system for tender management is typically divided into three main components: Ingestion & Preprocessing, Indexing & Retrieval, and Generation & Synthesis. The following steps outline the process:

- Document ingestion and preprocessing: Incoming tender documents (PDF, Word, image files) are converted into text. OCR extracts content from scans, while parsers break down Word or PDF documents in parallel. The text is then segmented into meaningful chunks (e.g. at paragraph or chapter level) to control the granularity of the search. Metadata (document name, section title, page number) is assigned to each chunk and later used for source references. This preprocessing ensures that structural information is retained and that the subsequent search can precisely access relevant sections.

- Indexing & retrieval: Each text chunk is converted into a semantic vector by an embedding encoder (such as a sentence transformer). These vectors are stored in a specialized vector database (e.g. Pinecone, Weaviate, or Milvus). At retrieval time, the system converts the user request into a query vector and searches for the most semantically similar chunks. A hybrid search method is often used: a dense vector search for semantic relevance is combined with classic keyword search (e.g. BM25) to reliably find technical terms or exact wording. The top-K chunks found can then be reordered by a re-ranking step so that the context passed to the LLM is as relevant as possible.

- Generation & preparation of results: The retrieved document excerpts are embedded in a prompt for the LLM together with the user question. Through careful prompt engineering, the model is guided to derive the answer exclusively from the supplied texts. The LLM synthesizes the information from multiple chunks and creates a concise answer. It can target various output formats:

- Summary of the most important tender contents (scope of services, deadlines, eligibility criteria).

- Structured extraction in the form of tables or JSON (e.g. checklists with requirements, deadlines, required evidence).

- Question-answer mode: Using a chat interface, the user can ask specific questions about the documents later on. The system searches again for suitable passages of text and formulates an answer with reference to the source.
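The hybrid search mentioned in the indexing and retrieval step needs some way to merge the dense and the keyword result lists. One common option is reciprocal rank fusion (RRF), sketched below. The article does not say which fusion method is used, so RRF is an assumption here, and the chunk IDs and the constant `k=60` (a frequently used default) are purely illustrative.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of chunk IDs into one ranking.

    Each chunk scores the sum of 1 / (k + rank) over the lists it appears in,
    so chunks ranked highly by several retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical results from a dense vector search and a BM25 keyword search.
dense_ranking = ["c3", "c1", "c7"]
keyword_ranking = ["c1", "c9", "c3"]

fused = reciprocal_rank_fusion([dense_ranking, keyword_ranking])
print(fused)  # c1 and c3 appear in both lists and therefore rank first
```

RRF only uses rank positions, so the dense similarity scores and the BM25 scores do not need to be brought onto a common scale.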

In all cases, the references to the sources are retained. Using the metadata of each text chunk, the system can specify page numbers or document titles. The user might, for example, receive “See Section 4.2 in Document X” as evidence. This traceability is essential for audits and internal reviews.
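How chunk metadata turns into a source reference can be shown with a small sketch. The `Chunk` structure, the paragraph-level splitting on blank lines and the citation wording are assumptions chosen for illustration, and the sample page content is invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    document: str  # document name
    section: str   # section title or number
    page: int      # page number in the source document


def chunk_pages(document: str, pages: list) -> list:
    """Split each page into paragraph-level chunks and attach metadata.

    `pages` is a list of (page_number, section, page_text) tuples.
    """
    chunks = []
    for page_no, section, text in pages:
        for paragraph in text.split("\n\n"):
            paragraph = paragraph.strip()
            if paragraph:
                chunks.append(Chunk(paragraph, document, section, page_no))
    return chunks


def cite(chunk: Chunk) -> str:
    """Format a human-readable source reference from the chunk metadata."""
    return f"See Section {chunk.section} in {chunk.document}, page {chunk.page}"


# Illustrative input (invented for this sketch).
pages = [(12, "4.2", "Offers must be received by 15.09.2025.\n\nLate offers are excluded.")]
chunks = chunk_pages("Document X", pages)
print(cite(chunks[0]))
```

Because the metadata travels with each chunk from ingestion onward, the reference can be produced without going back to the original file.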

The entire architecture can be integrated into corporate IT via standardized interfaces (e.g. via REST APIs). The AI-based analysis is thus incorporated as a module into existing workflows — from the initial risk assessment to the preparation of offers.

Use case

An IT systems integrator that regularly participates in complex public tenders was confronted with time-consuming and inefficient manual processing of tender documents. The manual initial screening and preliminary check took an average of 8 hours per tender and caused high costs because exclusion criteria were recognized late.

To overcome these challenges, a RAG-based system was introduced. This automates the processing of tenders, extracts relevant information and creates concise summaries in a short period of time to support decisions.

The results of the implementation showed significant improvements: the processing time for the initial review was reduced by around 59%. As a result, more tenders could be processed without increasing staff. In addition, users reported a noticeable reduction in workload and were able to focus more on the strategic quality of their bids.

Implementation and challenges

When introducing a RAG solution, it is important to consider not only the technology but also the organizational framework:

- Data Governance and Security: Tender documents often contain confidential information (such as price calculations or contract data). A secure operating model is therefore mandatory. Many companies prefer private cloud or on-premise deployments of LLMs and the vector database to avoid data leaks. Access rights must be managed consistently across the entire system so that each user can only see the documents to which they are entitled. The RAG architecture has an advantage here: the sensitive tender data remains in protected storage and is only passed to the model as context chunks when needed.

- System integration: The RAG tool should fit into existing processes. Common integration points include e-procurement platforms or document management systems (DMS). Using APIs, tender documents can be fed automatically into the preprocessing pipeline, and the resulting checklists or reports can be delivered back to the specialist departments. This avoids duplicated work and ensures that all departments work with the same information.

- Infrastructure & Scale: Modern vector databases support horizontal expansion (sharding, distributed search) to provide quick answers even with millions of documents. For on-premises operation, companies must provide sufficient resources (memory, GPUs for LLM). Cloud providers offer managed services (e.g. vector databases, LLM endpoints), which lowers the initial hurdle. Caching frequent queries or tiering data by access frequency can also help control costs.

- Maintenance and monitoring: A RAG system requires continuous maintenance. For example, the index update should be automated when new tenders are received. Performance and quality can be tracked through metrics (throughput, response times, extraction accuracy) and user feedback. Regular updates (e.g. better embedding models, newer LLM versions) ensure that the system keeps pace with current progress.
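Extraction accuracy, one of the monitoring metrics named above, can be tracked by comparing the system's output with a small hand-labeled reference set. The sketch below assumes that extractions are represented as flat field-to-value dictionaries. The field names and values are hypothetical, and exact string equality is a deliberately strict matching rule.

```python
def extraction_scores(predicted: dict, expected: dict) -> dict:
    """Compare extracted fields against a hand-labeled reference.

    precision: share of extracted fields that are correct
    recall:    share of reference fields that were extracted correctly
    """
    correct = sum(1 for key, value in predicted.items() if expected.get(key) == value)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(expected) if expected else 0.0
    return {"precision": precision, "recall": recall}


# Hypothetical reference labels and system output for one tender.
expected = {
    "deadline": "2025-09-15",
    "certificate": "ISO 27001",
    "validity_period": "90 days",
}
predicted = {
    "deadline": "2025-09-15",
    "certificate": "ISO 9001",
}

scores = extraction_scores(predicted, expected)
print(scores)
```

Tracking both values over time shows whether an updated embedding model or LLM version extracts more fields (recall) without introducing more wrong ones (precision).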

Conclusion

The combination of LLMs and retrieval-augmented generation is an effective approach to making tendering processes in industrial companies more efficient. With a RAG system, the initial review can be largely automated: deadlines, requirements and conditions are extracted reliably, sources are cited, and comprehensible analyses are created on this basis. Practical implementations show significant time savings and increased bidding capacity without compromising compliance.

Companies that invest in this technology now are creating a competitive advantage. With the same resources, they can process more tenders and rule out at an early stage those projects that would involve a disproportionate amount of effort. At the same time, the clear traceability of AI results increases experts' confidence in the solution. In our opinion, RAG is currently the technologically superior architecture for automated tender analysis compared to alternatives such as complex fine-tuning.

👉🏽 Would you like to learn more about AI in sales? In our live event (including a data center tour), we'll demonstrate how intelligent AI solutions can optimize, for example, the processing of tenders.

Register now: https://www.telemaxx.de/ueber-uns/aktuelles/veranstaltungen/25072025-ki-anwendungen-auf-telemaxx-opencloud-mit-rechenzentrumsfuehrung
