Explainable AI in manufacturing


AI applications are already being used regularly in production, from predictive maintenance to automatic quality testing. But in practice, many projects reach an invisible limit: a lack of trust in the models.

If an algorithm is accurate but opaque, specialists are reluctant to act on its recommendations. The consequence: outputs are checked manually, decisions are delayed, and the expected efficiency gains fail to materialize.

This is exactly where Explainable AI (XAI) comes in. The aim is not higher model accuracy, but more traceability and acceptance. Explainable AI makes it possible to interpret algorithmic decisions both technically and from a domain perspective, and thus creates a common basis for humans and machines.

Status quo & challenges

The problem is less technical than organizational. If specialists do not understand why, according to the model, a machine is about to fail, the result is usually questioned and, when in doubt, ignored.

Typical effects in practice:

- Predictive maintenance: The system reports an impending bearing failure, but the mechanism behind it remains unclear. Maintenance teams react cautiously or not at all, with potentially expensive shutdowns as a result.

- Visual quality inspection: A neural network classifies a component as faulty, but provides no visual explanation. Employees check again, sometimes with the opposite result. This reduces both throughput and trust in the system.

- Process optimization: Parameter control models show correlations, but no causal relationships. Without explanations, there is no basis for implementing recommendations in practice.

The bottleneck is explainability and its integration into everyday work. Companies that start here increase acceptance and efficiency, because less checking is needed and fewer incorrect decisions are made.

Using modern XAI solutions

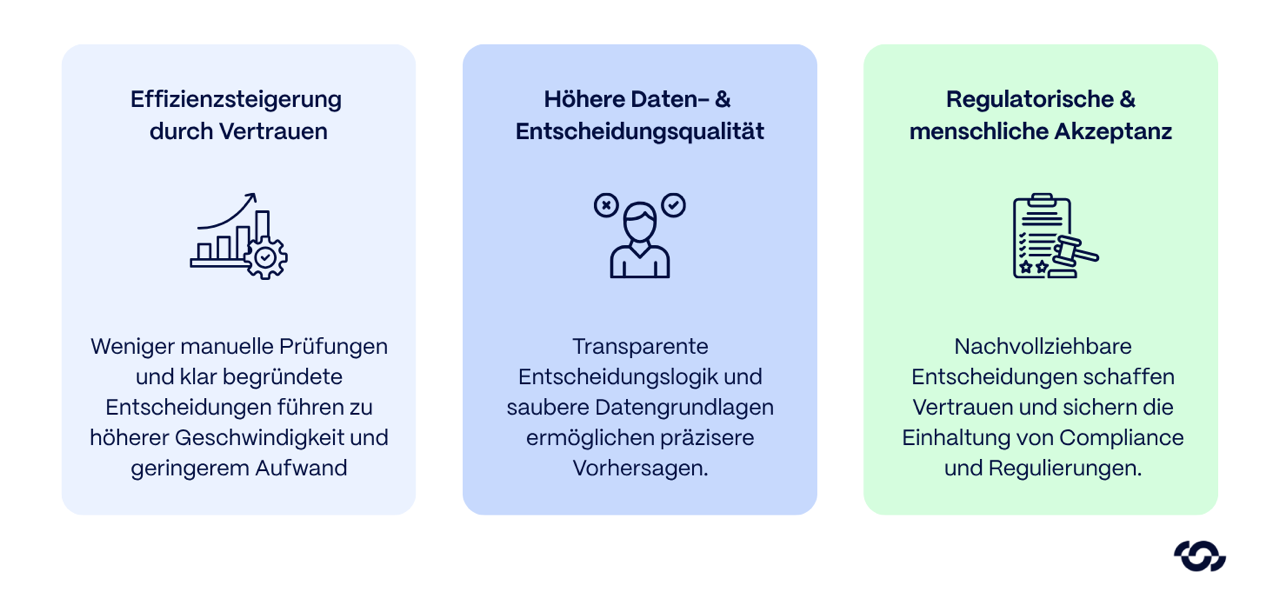

The key advancement of explainable AI is that it turns black box models into verifiable tools. While classic AI often only delivers “what,” XAI also explains the “why.” This creates measurable benefits in three key areas of industrial practice.

1. Increasing efficiency through trust

In manufacturing environments, decisions are rarely binary. If an AI prediction is incomprehensible, it is usually checked manually — a significant time factor.

XAI reduces this additional effort by providing reasons for decisions.

2. Higher data and decision quality

XAI creates transparency about the influencing factors in the model. As a result, systematic errors, faulty sensors or unrepresentative training data can be identified more quickly.

The result: clean data bases, comprehensible decision logic and more precise forecasts.

3. Increased automation through regulatory and human acceptance

Explainability is not only a technical requirement, but increasingly also a legal requirement.

As requirements tighten, companies must increasingly be able to prove, for certain high-risk applications, that decisions are documented in a comprehensible way.

XAI mechanisms provide the technical basis for this: automatic explanation logs, audit trails and visualizations of decision-making processes.

But even beyond regulatory requirements, transparent systems are being used more frequently.
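The explanation logs and audit trails mentioned above can be pictured as one structured record per decision. The following is a minimal sketch only: the field names, the `explanation_record` helper and the checksum scheme are illustrative assumptions, not a schema prescribed by any regulation or library.

```python
import hashlib
import json
from datetime import datetime, timezone


def _checksum(record: dict) -> str:
    """SHA-256 over the canonical (key-sorted) JSON form of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def explanation_record(model_version, inputs, prediction, contributions):
    """Bundle a prediction with its explanation into one audit-log entry."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "prediction": prediction,
        "contributions": contributions,  # e.g. per-feature attribution values
    }
    record["checksum"] = _checksum(record)
    return record


def is_unmodified(record: dict) -> bool:
    """Recompute the checksum to detect accidental changes to a stored entry."""
    body = {k: v for k, v in record.items() if k != "checksum"}
    return record.get("checksum") == _checksum(body)


entry = explanation_record(
    model_version="bearing-model-1.4",  # hypothetical identifier
    inputs={"temperature_c": 84.0, "vibration_mm_s": 6.8},
    prediction="maintenance required",
    contributions={"temperature_c": 0.05, "vibration_mm_s": 0.31},
)
print(json.dumps(entry, indent=2))
```

A plain checksum only catches accidental modification; a production audit trail would likely need signing or append-only storage on top.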

Technical Deep Dive: XAI Methods and Techniques

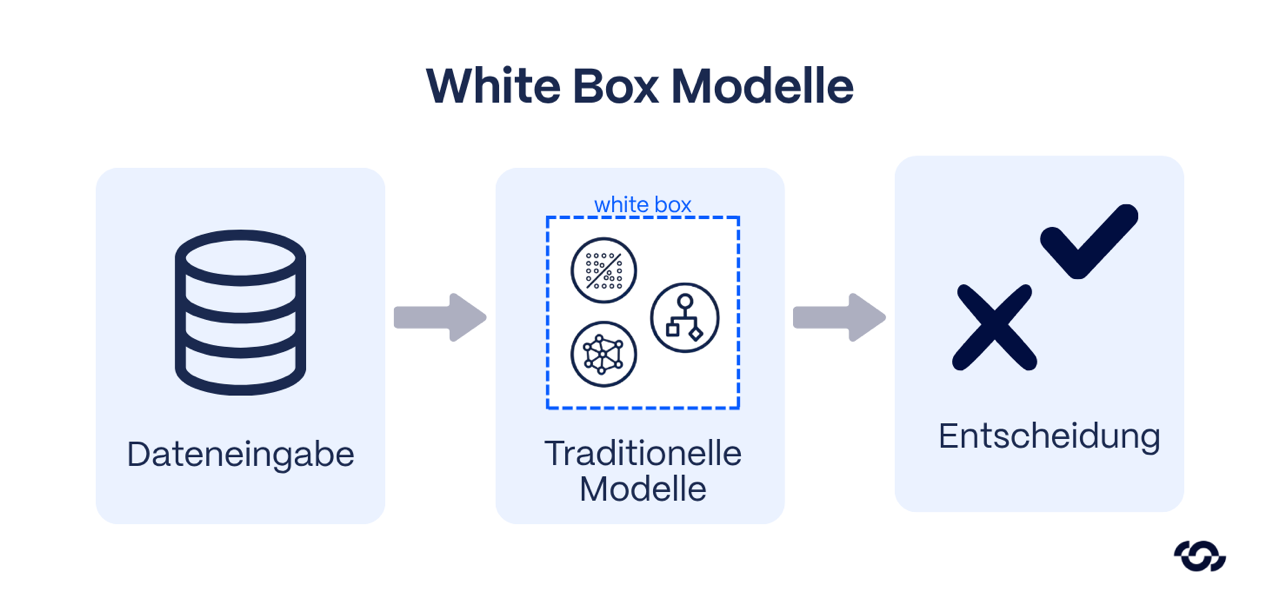

Explainable AI can basically be broken down into two approaches:

(1) Intrinsically explainable models whose structure is self-explanatory

(2) Subsequent (post-hoc) procedures that make complex models interpretable.

In industrial practice, both variants are used — depending on the application, data situation and desired model complexity.

Intrinsically explainable models

With intrinsically explainable models, the logic is immediately comprehensible.

Classic examples include decision trees, linear regression models, and rule-based systems. Each decision path can be compared directly with technical knowledge.

Such models are easy to validate and often meet regulatory requirements. Their disadvantage: limited expressiveness with high-dimensional sensor data or nonlinear relationships. In practice, they are therefore often used as a baseline or reference model to test more complex black box approaches.
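As an illustration of how a rule-based system exposes its decision path, here is a minimal sketch. The rule names, thresholds and sensor fields are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str                          # human-readable reason shown to the user
    condition: Callable[[dict], bool]  # predicate on a sensor reading
    verdict: str


# Hypothetical thresholds, for illustration only.
RULES = [
    Rule("vibration above 7.0 mm/s",
         lambda r: r["vibration_mm_s"] > 7.0,
         "maintenance required"),
    Rule("temperature above 85 degC and torque above 40 Nm",
         lambda r: r["temperature_c"] > 85.0 and r["torque_nm"] > 40.0,
         "maintenance required"),
]
DEFAULT_VERDICT = "no action"


def decide(reading: dict) -> tuple:
    """Return (verdict, reason); the first matching rule wins."""
    for rule in RULES:
        if rule.condition(reading):
            return rule.verdict, rule.name
    return DEFAULT_VERDICT, "no rule matched"


verdict, reason = decide(
    {"temperature_c": 90.0, "torque_nm": 45.0, "vibration_mm_s": 3.2}
)
print(f"{verdict} (because: {reason})")
```

Because every verdict carries the rule that produced it, a maintenance engineer can check the reason directly against domain knowledge.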

Post hoc explanations for black box models

More powerful models, in particular deep neural networks or ensemble methods, require additional explanatory mechanisms. This is where post-hoc methods come into play, which make decisions interpretable retrospectively.

Feature Importance & SHAP

Methods such as SHAP (SHapley Additive exPlanations) assign each input feature a quantitative influence on the prediction.

In manufacturing, this means that you can see exactly how much temperature, torque, or vibration frequency contribute to failure prediction.

SHAP is based on Shapley values from cooperative game theory, i.e. it provides “fair” contribution estimates per feature.
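To make the game-theoretic idea concrete, the sketch below computes exact Shapley values for a toy risk model by averaging each feature's marginal contribution over all feature orderings. It assumes a hypothetical `failure_risk` function and a single reference reading as baseline; the SHAP library itself uses background data and faster approximations, so this is an illustration of the principle, not of that library's implementation.

```python
from itertools import permutations
from math import factorial


def failure_risk(x: dict) -> float:
    """Hypothetical black-box risk score with a temperature-vibration interaction."""
    return (0.002 * x["temperature"]
            + 0.001 * x["torque"]
            + 0.03 * x["vibration"]
            + 0.0005 * x["temperature"] * x["vibration"])


def shapley_values(model, instance: dict, baseline: dict) -> dict:
    """Exact Shapley values relative to a baseline reading.

    For every ordering of the features, switch them one by one from the
    baseline value to the instance value and record the change in output.
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)
        previous = model(current)
        for name in order:
            current[name] = instance[name]
            value = model(current)
            totals[name] += value - previous
            previous = value
    n_orders = factorial(len(names))
    return {name: total / n_orders for name, total in totals.items()}


instance = {"temperature": 85.0, "torque": 55.0, "vibration": 6.0}
baseline = {"temperature": 70.0, "torque": 40.0, "vibration": 2.0}  # assumed "normal" reading
phi = shapley_values(failure_risk, instance, baseline)
for name, contribution in phi.items():
    print(f"{name:12s} {contribution:+.4f}")
```

The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, which is the property that makes the attribution "fair". Enumerating all orderings is only feasible for a handful of features.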

Local Surrogate Models (LIME)

LIME (Local Interpretable Model-Agnostic Explanations) explains individual predictions using a simple replacement model.

Example: For a neural network that classifies a workpiece as defective, LIME fits a local linear regression that imitates the network's behavior in the neighborhood of that one data point. This makes it possible to understand which variables were decisive in this situation.

LIME is particularly suitable for interactive analyses, for example in dashboards where engineers want to understand: “Why exactly this part?”
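The local-surrogate idea can be sketched in a few lines: perturb the data point, weight the perturbed samples by proximity, and fit a weighted linear model. This is a simplified illustration under stated assumptions (a hypothetical `defect_probability` black box, Gaussian perturbations, an exponential kernel), not the LIME library's actual implementation, which adds feature selection and regularization.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    x = [0.0] * n
    for row in reversed(range(n)):
        residual = m[row][n] - sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = residual / m[row][row]
    return x


def local_surrogate(predict, point, scales, n_samples=500, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `point`.

    Returns (intercept, coefficients). The intercept is the surrogate's
    prediction at `point`; each coefficient is a local slope per feature unit.
    """
    rng = random.Random(seed)
    d = len(point)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]       # perturbation in scaled units
        offsets = [s * zi for s, zi in zip(scales, z)]     # perturbation in feature units
        sample = [p + o for p, o in zip(point, offsets)]
        weight = math.exp(-sum(zi * zi for zi in z) / kernel_width ** 2)
        row = [1.0] + offsets                              # centred design row
        y = predict(sample)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]


def defect_probability(features):
    """Hypothetical nonlinear black box over [solder_temp_c, paste_volume_pct]."""
    temp, volume = features
    score = (0.08 * (temp - 250.0) - 0.05 * (volume - 100.0)
             + 0.002 * (temp - 250.0) ** 2)
    return 1.0 / (1.0 + math.exp(-score))


intercept, slopes = local_surrogate(defect_probability, [262.0, 91.0], scales=[2.0, 3.0])
print("local slope per degC of solder temperature:", round(slopes[0], 4))
print("local slope per percent of paste volume:   ", round(slopes[1], 4))
```

The slopes are only valid near the explained part; a different workpiece gets its own surrogate.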

Contrastive and counterfactual explanations

These methods answer the question: “What would have to be different for the model to decide differently?”

A counterfactual could show, for example, that a slightly lower vibration value would have prevented the alarm.

This is particularly valuable for engineers because such explanations enable direct recommendations for action: they show not only causes, but also levers.
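A very simple form of counterfactual search changes one feature in small steps until the decision flips. The sketch below assumes a hypothetical `alarm` model and step size; practical counterfactual methods search over several features at once and add plausibility constraints.

```python
def alarm(reading: dict) -> bool:
    """Hypothetical black box: raises an alarm when the risk score exceeds 1.0."""
    score = 0.08 * reading["vibration_mm_s"] + 0.006 * reading["temperature_c"]
    return score > 1.0


def single_feature_counterfactual(model, reading, feature, step, max_steps=200):
    """Find the smallest change to `feature` (in multiples of `step`) that flips the decision.

    Returns the modified reading, or None if no flip occurs within max_steps.
    """
    original = model(reading)
    for k in range(1, max_steps + 1):
        for direction in (-1, 1):
            candidate = dict(reading)
            candidate[feature] = reading[feature] + direction * k * step
            if model(candidate) != original:
                return candidate
    return None


reading = {"vibration_mm_s": 7.0, "temperature_c": 82.0}
counterfactual = single_feature_counterfactual(alarm, reading, "vibration_mm_s", step=0.1)
if counterfactual is not None:
    delta = counterfactual["vibration_mm_s"] - reading["vibration_mm_s"]
    print(f"Alarm would not have been raised with vibration changed by {delta:+.1f} mm/s")
```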

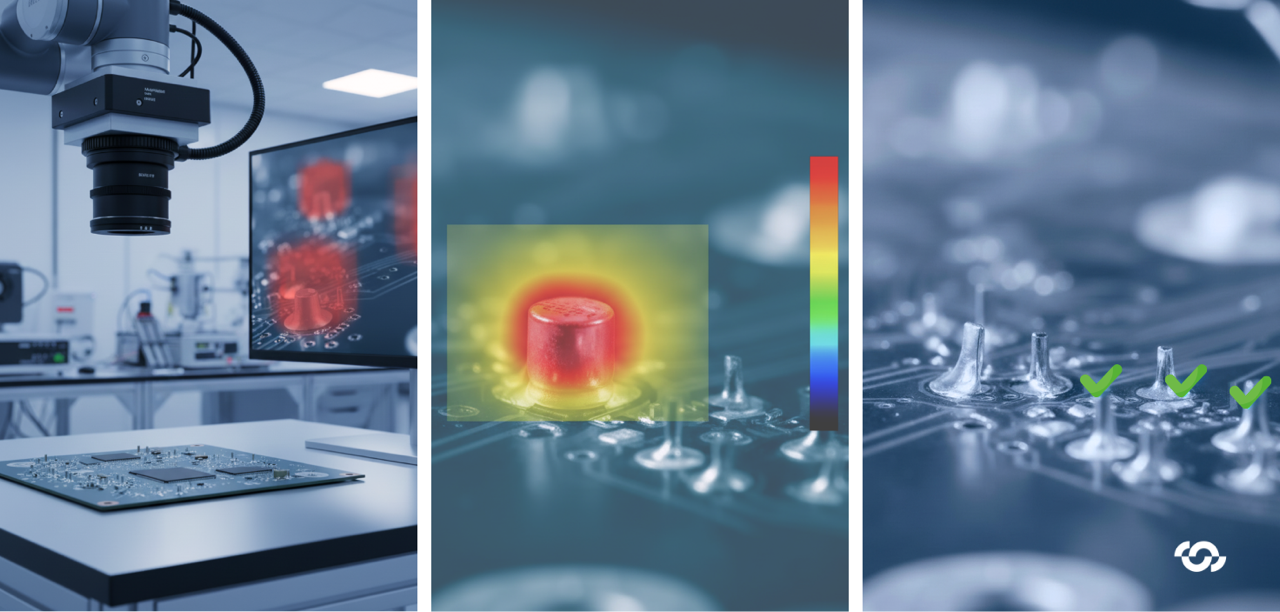

Visual heat maps & gradient-based methods

In image processing, for example in optical quality control, visual explanations are crucial.

Techniques such as Grad-CAM or Integrated Gradients mark areas of the image that have contributed to the decision. On a circuit board, it is possible to highlight the regions that the model has identified as faulty.

The advantage: The explanation is visually intuitive and can be easily compared with specialist knowledge.
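To show what a gradient-based attribution computes, here is a minimal sketch of Integrated Gradients for a tiny logistic "defect detector" on a 3x3 grayscale patch. The weights, the patch and the all-black baseline are assumptions for illustration; a real inspection model would be a deep network, and the gradients would come from an autodiff framework rather than a hand-written formula.

```python
import math

# Hypothetical weights of a logistic model on a 3x3 patch (row-major order).
WEIGHTS = [0.1, -0.2, 0.0,
           0.3,  1.5, 0.2,
           0.0, -0.1, 0.1]
BIAS = -1.0


def predict(pixels):
    """Defect probability for a flattened 3x3 patch."""
    z = BIAS + sum(w * p for w, p in zip(WEIGHTS, pixels))
    return 1.0 / (1.0 + math.exp(-z))


def gradient(pixels):
    """Analytic gradient of the sigmoid output with respect to each pixel."""
    p = predict(pixels)
    return [p * (1.0 - p) * w for w in WEIGHTS]


def integrated_gradients(pixels, baseline, steps=200):
    """Midpoint Riemann approximation of Integrated Gradients.

    Averages the gradient along the straight path from baseline to input,
    then scales by the per-pixel difference (input - baseline).
    """
    avg_grad = [0.0] * len(pixels)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (x - b) for x, b in zip(pixels, baseline)]
        for i, g in enumerate(gradient(point)):
            avg_grad[i] += g / steps
    return [(x - b) * g for x, b, g in zip(pixels, baseline, avg_grad)]


patch = [0.2, 0.1, 0.0,
         0.3, 0.9, 0.2,
         0.1, 0.0, 0.1]
black = [0.0] * 9  # assumed baseline: an all-black patch
heat = integrated_gradients(patch, black)
for row in range(3):
    print("  ".join(f"{v:+.3f}" for v in heat[row * 3:(row + 1) * 3]))
```

The printed 3x3 grid is the heat map: the attributions sum (approximately) to the difference between the prediction for the patch and for the baseline, so each value can be read as that pixel's share of the decision.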

Global vs. local explanations

- Local explanations (e.g. LIME, SHAP) show why an individual decision was made that way.

- Global explanations analyze general model behavior across many decisions.

A global model audit can reveal, for example, that a network reacts disproportionately to a specific sensor channel, which indicates potential miscalibration or data bias. Both perspectives complement each other: locally for individual transparency, globally for model validation.
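One common way to obtain such a global view is permutation importance: shuffle one sensor channel across the dataset and measure how much the model's error grows. The sketch below uses a hypothetical three-channel model and synthetic data; it illustrates the principle and is not tied to any particular library.

```python
import random


def model(row):
    """Hypothetical trained model: strong on channel 0, weak on channel 1, ignores channel 2."""
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(predict, rows, targets):
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(predict, rows, targets, n_repeats=5, seed=0):
    """Average increase in error when one feature column is shuffled."""
    rng = random.Random(seed)
    base_error = mean_squared_error(predict, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increase = 0.0
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increase += mean_squared_error(predict, permuted, targets) - base_error
        importances.append(increase / n_repeats)
    return importances


data_rng = random.Random(42)
rows = [[data_rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(300)]
targets = [model(r) for r in rows]  # synthetic, noise-free targets
for channel, score in enumerate(permutation_importance(model, rows, targets)):
    print(f"sensor channel {channel}: importance {score:.3f}")
```

A channel whose importance is far larger than domain knowledge would suggest is a candidate for the kind of miscalibration or data-bias check described above.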

Practical recommendation

A hybrid approach has proven effective in industrial projects:

- intrinsically explainable models in early project phases or for heavily regulated applications,

- post-hoc explanations for complex production models,

- combined with role-dependent presentation, from a simple traffic light signal for workers to a SHAP diagram for data scientists.

The decisive point is not the method, but the context-appropriate use. A technically perfect explanation is of little use if it is incomprehensible to the user.
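The role-dependent presentation described above could look roughly like the following sketch. The role names, thresholds and output shapes are hypothetical; the point is only that one underlying explanation is rendered at different levels of detail.

```python
def traffic_light(risk: float, amber_at: float = 0.3, red_at: float = 0.7) -> str:
    """Map a risk score in [0, 1] to a colour; thresholds are illustrative."""
    if risk >= red_at:
        return "red"
    if risk >= amber_at:
        return "amber"
    return "green"


def present(risk: float, contributions: dict, role: str):
    """Render the same explanation at a level of detail suited to the role."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if role == "operator":
        return traffic_light(risk)
    if role == "maintenance":
        return {"signal": traffic_light(risk),
                "top_factors": [name for name, _ in ranked[:2]]}
    if role == "data_scientist":
        return {"risk": risk, "contributions": ranked}
    raise ValueError(f"unknown role: {role}")


contributions = {"temperature": 0.05, "torque": -0.02, "vibration": 0.31}
for role in ("operator", "maintenance", "data_scientist"):
    print(role, "->", present(0.82, contributions, role))
```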

Examples of best practice from industry

Practical examples show most clearly how Explainable AI creates real added value in manufacturing. The following cases come from industrial projects and pilot applications where explainable models led to higher process reliability, better data quality and measurable cost savings.

Use Case 1: Visual Quality Inspection at an Electronics Manufacturer

Starting position:

A manufacturer of electronic components used deep learning models to automatically test solder joints on circuit boards. Although the detection rate was high, inspectors distrusted the system because it occasionally sorted out intact parts without explanation.

Solution:

The data science team integrated XAI modules based on Grad-CAM (gradient-weighted class activation mapping). These generated a heat map for each analyzed image, visually highlighting the relevant areas. Inspectors could immediately see at which solder joint the model suspected a fault.

Result:

- Significantly higher trust in AI output: specialists understood why a part was considered faulty.

- Measurable reduction in duplicate tests.

- The misclassification rate fell significantly as real errors and false alarms became easier to distinguish.

The combination of model performance and explainability led to measurably higher test reliability and lower quality costs.

Use Case 2: Data Validation and Process Monitoring in Automotive Manufacturing

Starting position:

An automotive supplier used machine learning models to optimize process parameters in plastics processing. After several model updates, the team noticed that predictions were becoming increasingly implausible, with no obvious cause.

Solution:

Using a global XAI approach (combination of SHAP Summary Plots and Partial Dependence Analysis), it was shown that a sensor feature with systematic drift had a strong influence on the model. The cause was a faulty temperature sensor.

Result:

- Early detection of a sensor fault that would have gone undetected for months without XAI.

- Improved model stability after retraining with cleaned data.

- Integration of XAI diagnostics into the existing MES for continuous data validation.

XAI not only provides explanations here, but is also used for quality monitoring.
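Partial dependence analysis, one of the two techniques named in this use case, can be sketched as follows: force one feature to each value on a grid, average the model's predictions over the dataset, and inspect the resulting curve. The model, feature layout and data below are hypothetical and do not reproduce the project's actual setup.

```python
def partial_dependence(predict, rows, feature_index, grid):
    """Average prediction over the dataset with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def cycle_time_model(row):
    """Hypothetical process model over [melt_temp_c, pressure_bar]."""
    melt_temp, pressure = row
    return 20.0 + 0.4 * (melt_temp - 230.0) + 0.01 * pressure


# Hypothetical historical process data: [melt_temp_c, pressure_bar].
history = [[228.0, 800.0], [231.0, 820.0], [233.0, 790.0], [229.0, 810.0]]
grid = [225.0, 230.0, 235.0, 240.0]
curve = partial_dependence(cycle_time_model, history, feature_index=0, grid=grid)
for temp, avg in zip(grid, curve):
    print(f"melt temperature {temp:.0f} degC -> average prediction {avg:.2f}")
```

A steep curve for a channel that is known to drift is a hint that sensor errors propagate strongly into the predictions, which is the kind of finding the use case describes.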

Findings from practice

Several overarching lessons can be derived from the projects:

- Explainability increases usage rate: Models whose decisions are comprehensible are used more frequently and consistently by specialists.

- XAI improves data quality: Explanations reveal systematic anomalies, often earlier than traditional monitoring systems.

- Transparency lowers risks: Comprehensible decision logic reduces false alarms and unnecessary interventions.

- Technical implementation is feasible: With today's frameworks (e.g. Captum, SHAP, Alibi, tf-explain), XAI can be implemented without massive additional effort. What is decisive is integration into existing processes, not just the model side.

Industry experience shows that Explainable AI is not a theoretical concept, but a factor that matters both commercially and technically. Where classic AI systems often fail because of the “why,” XAI enables measurable improvements in reliability, efficiency and trust in data-driven decisions.

Conclusion

In industrial practice, explainable AI is evolving from a field of research into a clear productivity and trust factor.

Especially in manufacturing, where safety, reliability and quality are crucial, AI can only develop its potential if its decisions are comprehensible.

Explainable AI bridges the gap between complex models and human expertise. It makes visible why a system makes a decision and allows specialists to review, confirm or selectively correct that decision.

Companies that take this step early benefit in two ways:

- in the short term due to higher process stability and faster decision cycles,

- in the long term through compliance security, more robust models and a data-driven corporate culture.

Technically, implementation is no longer an obstacle today. The key challenge is to systematically embed XAI into data and process landscapes instead of viewing it as an isolated analysis tool.
